Overlapping Computation & Communication with MPI Non-blocking Calls

Enable MPI shared memory optimizations

Link with the DMAPP library using the following link flags:

Static linking:

Dynamic linking:

Enable optimized DMAPP collectives

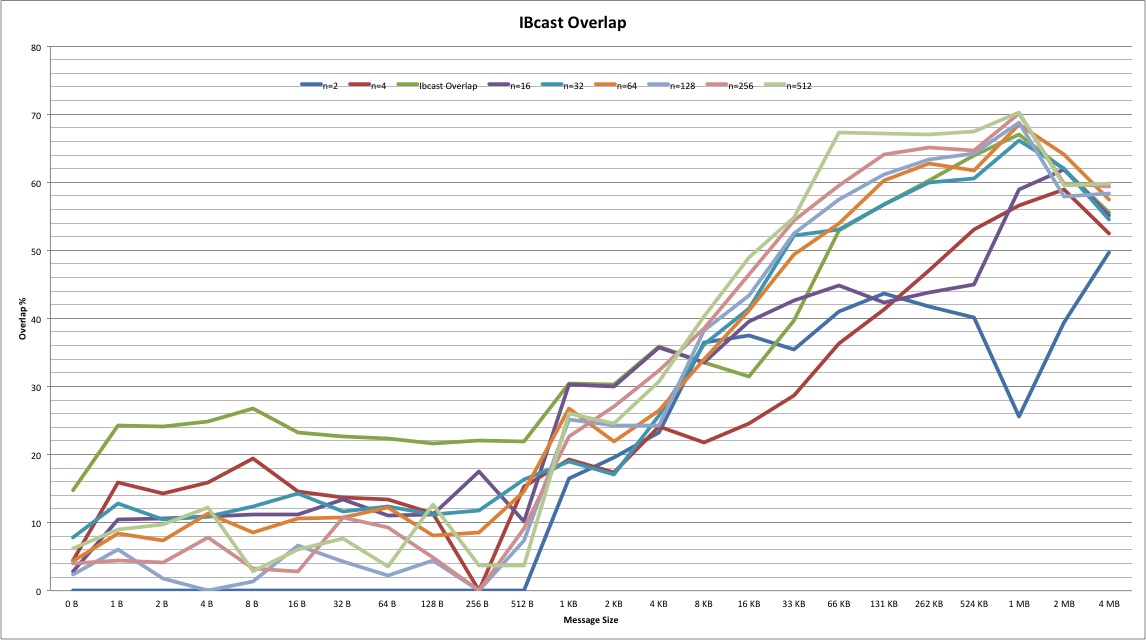

Results: the following results show the percentage of computation/communication overlap achieved for various message sizes and rank counts.
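The section does not state how overlap % is computed. One common definition (an assumption here, similar in spirit to what non-blocking collective benchmarks report) compares three timings: the pure communication time with no computation (`t_pure`), the computation alone (`t_compute`), and post + compute + wait together (`t_overall`). The communication left exposed is `t_overall - t_compute`, so overlap = 100 × (1 − exposed / `t_pure`). For example, with `t_pure` = 10 µs, `t_compute` = 8 µs and `t_overall` = 12 µs, 4 µs stay exposed and the overlap is 60%. The function name below is hypothetical.

```c
/* Hypothetical helper: percentage of communication hidden behind
 * computation, under the definition described above.
 *   t_pure    - non-blocking call + wait, no computation
 *   t_compute - computation alone
 *   t_overall - post + computation + wait together */
double overlap_percent(double t_pure, double t_compute, double t_overall)
{
    if (t_pure <= 0.0)
        return 0.0;  /* undefined; report no measurable overlap */

    /* Communication time that was not hidden by the computation. */
    double exposed = t_overall - t_compute;
    double pct = 100.0 * (1.0 - exposed / t_pure);

    /* Clamp: timing noise can push the raw value outside [0, 100]. */
    if (pct < 0.0)
        pct = 0.0;
    if (pct > 100.0)
        pct = 100.0;
    return pct;
}
```

A value of 100% means the communication was fully hidden (`t_overall` equals `t_compute`); 0% means none of it was (`t_overall` is at least `t_compute + t_pure`).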
