Data Transfer

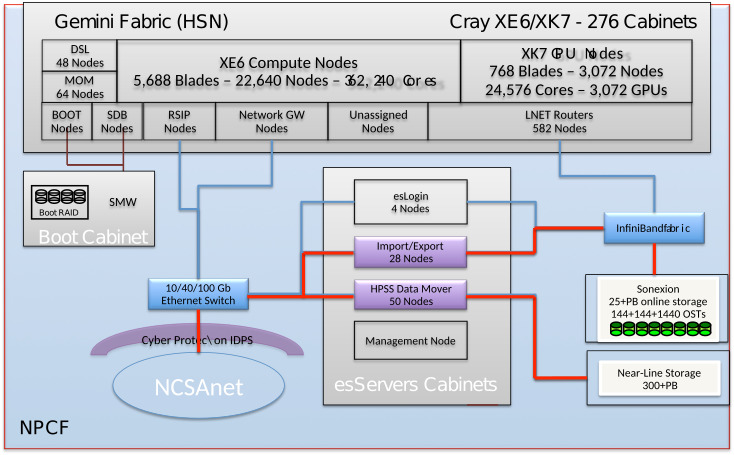

Please note: Globus Online is the supported, preferred method of moving files or groups of files of any significant size around the Blue Waters system or between Blue Waters and other facilties. Basically if it's not an operation that can be completed by the 'cp' command, use Globus Online for the transfer. GO has parallel access to all the data moving hardware on the system and can typically move data very quickly and efficiently. The Blue Waters prototype data sharing service is now available. This service allows allocated Blue Waters partners to share datasets generated on Blue Waters with members of their scientific or engineering community with two methods: web services for small files and Globus Online for large files. Please see the Data Sharing Service for more information. Particularly, do NOT do large transfers with programs like scp, sftp, or rsync. They clog up the login nodes and cause other user interactive shells to slow down. There are specific limits for how long single processes can be run on the Blue Waters login nodes, and programs violating those limits will be killed automatically. See the 2019 Blue Waters Symposium presentation on Nearline Data Retrieval for a recent discussion on retrieving data from Nearline during the final months of Blue Waters general availability. Globus OnlineDescriptionGlobus Online is a hosted service that automates the tasks associated with moving files between sites. Users queue file transfers which are then done asynchronously in the background. Globus Online transfers files between "endpoints" which are systems that are registered with the G.O. service. Blue Waters provides an endpoint to its filesystems (ncsa#BlueWaters) served by multiple Import/Export nodes optimized for performance with Globus Online (the equivalent archive storage endpoint is ncsa#Nearline). If you need to transfer files to or from a system that isn't yet registered as an endpoint (like your desktop or laptop), you register that system using Globus Connect. Please note following, enforced, guidelines:

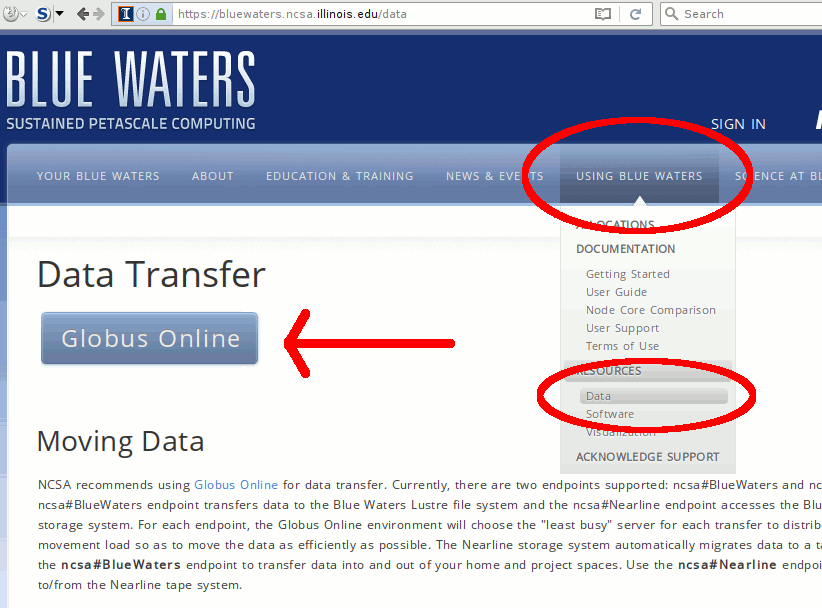

How to use Globus OnlineSign in to the Blue Waters Data Movement portal to transfer data between sites by using the web interface. To get there, go to the Blue Waters portal (bluewaters.ncsa.illinois.edu), mouse over the "Using Blue Waters" tab and click on the "Data" button to go to the Data Transfer page. The "Globus Online" button on the top of that page takes you to the Globus Online user interface:

If you don't have a Globus Online account, see our page about how to create one. If you have a Globus Online account and it's linked to your Blue Waters portal account, you will see the following screen. Click on the "Proceed" button to authenticate with your Blue Waters credentials.

On the next screen, enter your username and then passcode (PIN plus token code) just like when logging into the portal or into the system:

Once you've logged into Globus Online, you see the "Transfer Files" screen:

which allows you to transfer files between Globus Online endpoints. If you need to transfer files from a system that isn't yet a G.O. endpoint, you set it up to be one with the Globus Connect software:

Only transfer complete files Please only initiate transfers within Globus Online sourcing from files that have been written to disk and closed by the application. If GO is trying to transfer a file from Lustre that is being written to by a user application, then it gets confused about where it is in the file and tries to transfer the file multiple times. This uses up a lot of extra resources and doesn't gain anything for the user. It's faster to transfer files once they're done being written. Lustre StripingPreviously, when a file was transferred to a Blue Waters Lustre file system (home, scratch, projects) using GO, the file would use the system default stripe settings (stripe count 1, stripe size 1 MB). As of October 2013, this has been patched. Incoming files now inherit the stripe count, stripe size, and stripe offset settings of the directory in which they are placed by GO. The stripe count for a particular file will be revised higher, if necessary, for very large files. Command Line Interface (CLI)The ssh-based CLI was deprecated August 1, 2018. We are packaging the globus-cli on Blue Waters as guided by Globus. Please check back for updates.Self-deployment of Python-based Globus CLITo self-deploy the new Python-based Globus CLI, follow these 7 steps: module load bwpy virtualenv "$HOME/.globus-cli-virtualenv" source "$HOME/.globus-cli-virtualenv/bin/activate" pip install globus-cli deactivate export PATH="$PATH:$HOME/.globus-cli-virtualenv/bin" globus login You are now logged into Globus and can intiate queries and transfers. For example find the Blue Waters endpoint:> globus endpoint search BlueWaters ID | Owner | Display Name ------------------------------------ | --------------------- | ----------------------- d59900ef-6d04-11e5-ba46-22000b92c6ec | ncsa@globusid.org | ncsa#BlueWaters c518feba-2220-11e8-b763-0ac6873fc732 | ncsa@globusid.org | ncsa#BlueWatersAWS You can then activate an endpoint and list content: > globus endpoint activate d59900ef-6d04-11e5-ba46-22000b92c6ec The endpoint could not be auto-activated. This endpoint supports the following activation methods: web, oauth, delegate proxy For web activation use: 'globus endpoint activate --web d59900ef-6d04-11e5-ba46-22000b92c6ec' > globus endpoint activate --web d59900ef-6d04-11e5-ba46-22000b92c6ec Web activation url: https://www.globus.org/app/endpoints/d59900ef-6d04-11e5-ba46-22000b92c6ec/activate Cut-n-paste the URL into a browser and authenticate against NCSA two-factor. Now you can list the endpoint.

> globus ls -l d59900ef-6d04-11e5-ba46-22000b92c6ec:${HOME}

Permissions | User | Group | Size | Last Modified | File Type | Filename

----------- | ------ | --------- | ---------- | ------------------------- | --------- | -------------

0755 | gbauer | bw_staff | 4096 | 2018-03-14 23:20:16+00:00 | dir | 5483992/

0755 | gbauer | bw_staff | 4096 | 2018-03-15 04:42:23+00:00 | dir | 5722562/

0755 | gbauer | bw_staff | 4096 | 2018-03-15 03:45:23+00:00 | dir | 6210934/

0755 | gbauer | bw_staff | 4096 | 2012-06-06 01:16:33+00:00 | dir | XYZ/

0755 | gbauer | bw_staff | 4096 | 2016-11-18 19:06:53+00:00 | dir | 123/

Please see https://docs.globus.org/cli/ for more commands and examples.

More on CLIMoving Files Files can be moved on Nearline using the Pattern Matching This is an example script demonstrating a method to transfer only files of a given pattern. NOTE, for N files, the script would generate N transfers.

Important CLI Notes

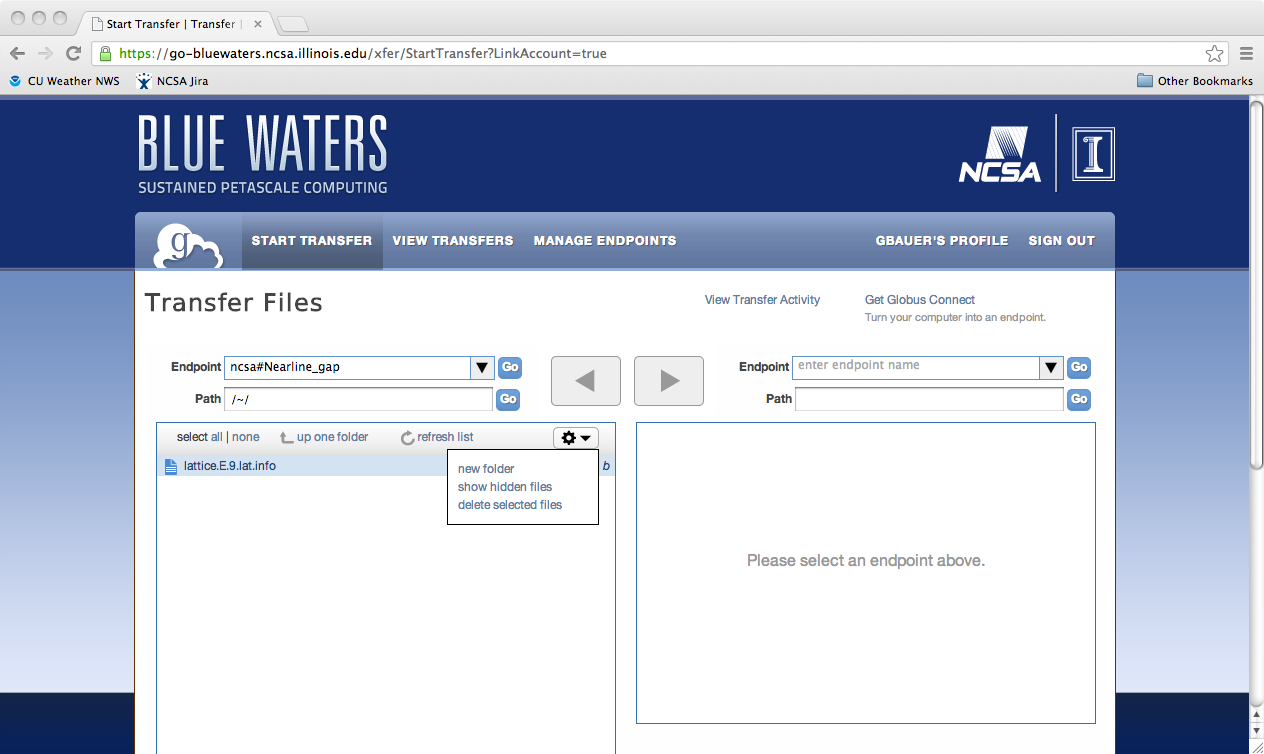

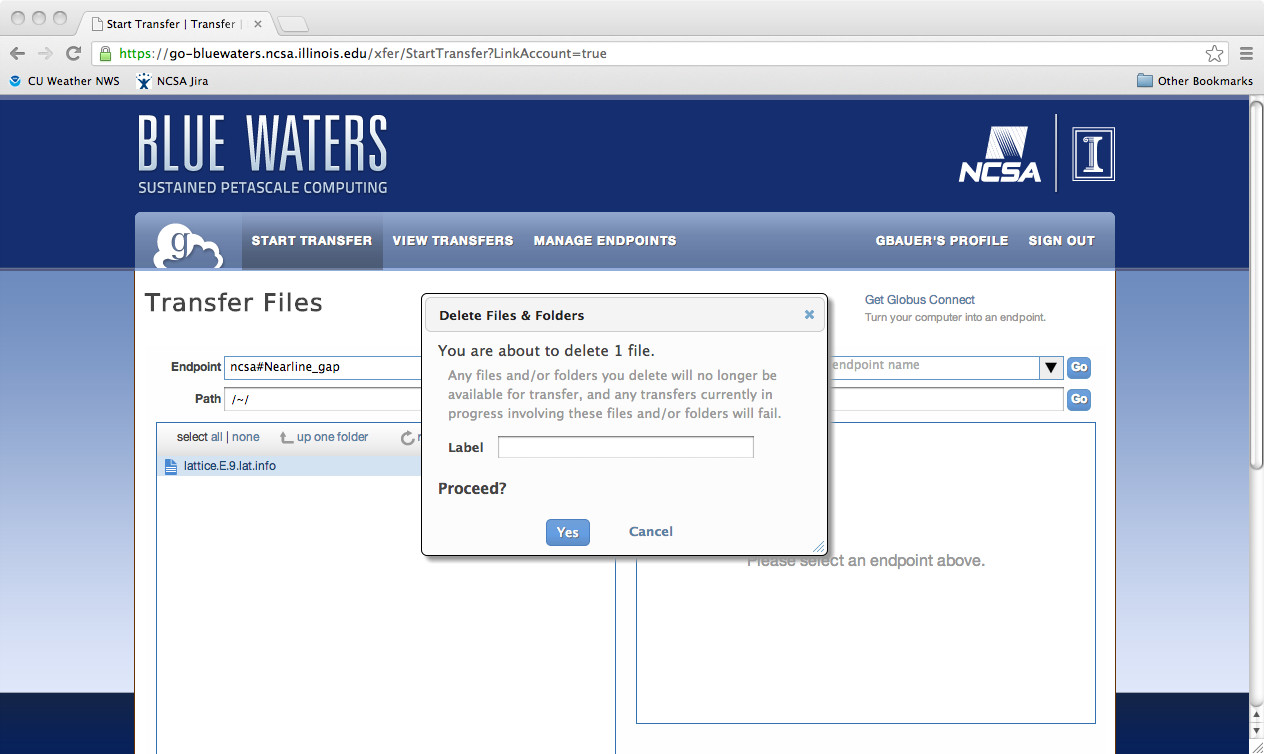

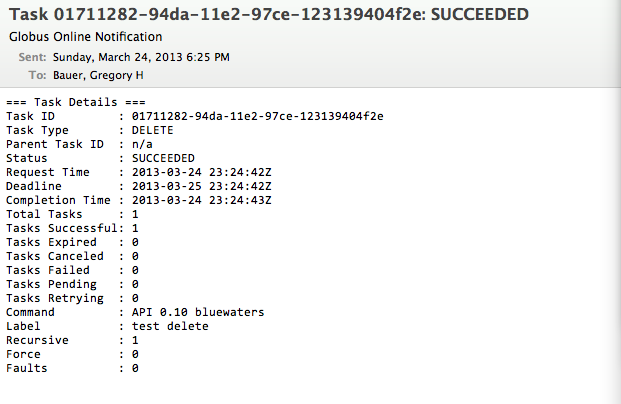

globus help Deleting a File, Folder, or DirectoryTo delete files using Globus Online from a endpoint, you first need to login into Globus Online and select the enpoint you wish to delete from, by first following the proceedure for transfereing a file. Next, you need to select the object to delete, click on the action "wheel" triangle and select "delete selected files". You will then be asked to confirm the process and provide a label for future reference (optional). Click on "Yes" when ready. The object to be deleted will then get set with a line across it as is shown in the next image. If you have the option set to receive an email for every transaction you should see the following notice appear in your email once the action is completed.

File Aggregation for transferBlue Waters' Nealine system will not able to retrieve hundreds of files from the storage tapes as the files will have been scattered over many tapes, each of which needs to be rewound to the correct location for every single file. Instead you must aggregate tiny files into chunks of approximately 10s of GBs in size.

First create a list of directories with files to archive: h2ologin$ cat >files-to-archive.txt <<EOF then obtain an interactive session and run tar h2ologin$ qsub -I -l nodes=1:xe:ppn=32 -l walltime=2:0:0 which will use up to 32 cores on a compute node to create up to 32 tar files in parallel producing tar files simulation1.tar.gz simulation2.tar.gz etc. Similarly you can use the same list of files to extract the resultant tar files in parallel h2ologin$ qsub -I -l nodes=1:xe:ppn=32 -l walltime=2:0:0 These can be encapsulated in a convenient shell script: #!/bin/bash which can be used like so bash aggregate.sh simulation1 simulation2 input-data code Data Compression and transfer discussionThere are a variety of factors to consider when deciding whether or not to compress data. For data that compress at high ratios (ascii text, highly structured binary data ) the space savings alone may warrant compression. In other cases, it may help to do some analysis before moving a large amount of data in order to accomplish the move in the least time. To help with your decision, it's useful to take a few measurements. The examples and model below were developed with gzip for compression (other utilities like zip, bzip, compress are available on many unix systems) and globus-online for the transfer mechanism. See the detail log for specifics. 1) The bandwidth between your site and NCSA may be determined by a tool like perfsonar. For many Xsede resource providers, a table is available at: http://psarch.psc.xsede.org/maddash-webui/ . Alternatively, you may run your own bandwidth test to our endpoint from a browser by loading: http://ps.ncsa.xsede.org , or you may use one of the well known bandwidth testing applications available online to get your baseline bandwidth to the internet. We'll call this bandwidth : xfer_bandwidth (MB/s unless otherwise indicated). 2) When compressing your data, make a note of how long it takes (use the time command on a unix system). You may then calculate the compression bandwidth as : (raw_size / compression_time). We'll name the compression bandwidth : compression_bandwidth=(raw_size/compression_time). It may be useful to compress a smaller representative file to get this number if the workflow will be moving extremely large files. 3) The compression ratio for your data will be the size of the raw data divided by the size of the compressed data (this should be a number >= 1 ). This variable will be : compression_ratio=(raw_size/compressed_size). With the measurments above, we can develop a simple procedure to help us determine when compression will save time with respect to data transfers. The goal is to compare these 2 expressions:

With compression, the effective bandwidth is amplified and becomes compression_ratio*xfer_bandwidth and the second term represents time spent compressing data. The smaller quantity in seconds will move your data in the least time. You may still opt to compress if saving space is the overriding concern. Here we look at a couple of example scenarios: Scenario 1: gigabit ethernet enabled laptop moving a 1 gigabyte file to Blue Waters 1015MB / (112MB/s) vs. 1015MB / [(1015MB/775MB )*112MB/s] + 1015MB / [1015MB/118 s] 9s vs. ( 7 + 118 )s Rule of thumb: With high bandwidth end-to-end networking (gigabit ethernet and better), compression will add time to your transfers. Until compression algorithms can run at much higher bandwidths, this will continue to hold. Scenario 2: wifi enabled laptop moving an approx. 1 gigabyte file to Blue Waters 1015MB / (3.75MB/s) vs. 1015MB / [(1015MB/775MB )*3.75MB/s] + 1015MB / [1015MB/118 s] 270s vs. ( 206 + 118 )s Rule of thumb: With end-to-end bandwidth near 100baseT speeds of 100Mbit/s, compression is more of a toss-up with respect to overall transfer speed. Other factors may come into play like the consistency of the xfer_bandwidth. The compression_ratio becomes more important and may be the dominant factor. Scenario 3: broadband cable connection working from home and transfer of small source code directory tree 6.8MB / (0.11MB/s) vs. 6.8MB / [(6.8MB/2.4MB])*.11MB/s] + 6.8MB / [6.8MB/0.5s ] 62s vs (22 + 0.5 )s Rule of thumb: With low bandwidth links (home broadband, hotel lobby...) , the compression_bandwidth is typically higher than the xfer_bandwidth and compressing saves both time and space: win-win. This is changing as commodity networks are upgaded, and watch out for asymmetric bandwidths. On the test setup for this scenario, the download bandwidth was 3x the upload bandwidth so compression may save time in one direction and cost time in the other. The simplified model and discussion ignores a few factors:

Performance ConsiderationsWe recommend users to transfer fewer but larger files. If a transfer attempts to move tens to hundreds of thousands of small (<1GB) files, performance will suffer due to file staging overhead. In such cases, It is best to use tar to form larger files of size tens or hundreds GB before starting a GO transfer. Globus Transfers to AWS S3Any user desiring to make use of the Globus AWS S3 connector needs to have a file created in their home directory that specifies the S3 credentials associated with their local user account. The configuration for the AWS S3 Connector is stored in $HOME/.globus/s3. This file provides a mapping of the current user’s ID to S3 access keys. This file can be created and populated by the user using the following two commands:

The “S3_ACCESS_KEY_ID” and “S3_SECRET_ACCESS_KEY” correspond to the Access Key ID and Secret Access Key for the user’s S3 credentials that have been granted access to the S3 bucket(s) the user intends to access. These must be provided by the user. When complete, the file should contain 3 fields, separated by semi-colons, that look something like:

See https://docs.globus.org/premium-storage-connectors/aws-s3#s3-credentials-anchor for more information on identity credentials. The name of the S3 enabled endpoint is ncsa#BlueWatersAWS. Globus Transfers to Google DriveGoogle G Suite for Education accounts provide unlimited Google Drive storage, subject to a 750 GB per day upload limit. The maximum size per single file is 5 TB, and the upload limit is only enforced on new transfers, so it is possible to upload, e.g., four 4 TB files simultaneously. Observed upload speeds are around 30 MB/s, so it may not be possible to upload more than 2.5 TB per day. Download speeds are much better. Users with Google accounts in domains other than illinois.edu must email help+bw@ncsa.illinois.edu to request access. The Blue Waters Google Drive endpoint may be accessed via https://go-bluewaters.ncsa.illinois.edu/endpoints?q=BW%20Google%20drive&scope=all. To use the Google Drive endpoint you must first create a new collection. Click on "BW Google Drive Endpoint", then "Collections", then "Add a Collection", select "NCSA" as your organization, click "Continue", and sign in with your Blue Waters username and PIN+tokencode. The "Create a Guest Collection" form is mostly self-explanatory, but the "Base Directory" documentation incorrectly states that the path / contains My Drive etc., when in fact / is equivalent to My Drive. It is strongly recommended that you define a Base Directory to limit Globus Online access to your Google Drive, but do not begin the Base Directory path with "/My Drive" as suggested, as this will produce a "Directory Not Found" error in the Globus Online File Manager; rather specify the path to the Base Directory treating My Drive as root (/). Additional Information / References

|

Skip to Content